DDPM-AFHQ: Implementing Denoising Diffusion Probabilistic Models on AFHQ Dataset

Date:

Generative Models, Diffusion Models, Computer Vision, U-Net Implementation

DDPM-AFHQ: End-to-End Diffusion Model Implementation

This project implements a full Denoising Diffusion Probabilistic Model (DDPM) on the cats subset of the AFHQ dataset. It covers the standard diffusion pipeline: the forward noising process, the reverse denoising process, a cosine noise schedule, a U-Net denoiser, training with an L1 noise-prediction loss, and visualization of both forward and reverse diffusion. All training experiments, including FID logging, progressive sample-quality checks, and the full 10k-step training run, were completed on an A100 GPU.

Diffusion Forward & Reverse Processes

The forward process gradually adds Gaussian noise:

\(x_t = \sqrt{\bar{\alpha}_t} x_0 + \sqrt{1-\bar{\alpha}_t}\epsilon, \qquad \epsilon \sim \mathcal{N}(0,I)\)
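As a minimal sketch of the closed-form forward step above, the following plain-Python function noises a flattened image for a given $\bar{\alpha}_t$. The name `q_sample` and the use of lists in place of image tensors are assumptions for illustration, not the project's actual code. The noise is passed in explicitly so the result is reproducible.

```python
import math
import random


def q_sample(x0, alpha_bar_t, eps):
    """Return x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps.

    x0 and eps are equal-length sequences of floats (a flattened image and
    its Gaussian noise); alpha_bar_t is the cumulative product of alphas at t.
    """
    if len(x0) != len(eps):
        raise ValueError("x0 and eps must have the same length")
    signal = math.sqrt(alpha_bar_t)
    noise = math.sqrt(1.0 - alpha_bar_t)
    return [signal * x + noise * e for x, e in zip(x0, eps)]


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.5, -0.25, 1.0]                     # toy "image"
    eps = [rng.gauss(0.0, 1.0) for _ in x0]    # eps ~ N(0, I)
    for alpha_bar_t in (0.99, 0.5, 0.01):      # early, middle, late timestep
        print(alpha_bar_t, q_sample(x0, alpha_bar_t, eps))
```

When $\bar{\alpha}_t$ is close to 1 the output stays near $x_0$, and when it is close to 0 the output is almost pure noise, which is the behavior the forward-diffusion figure illustrates.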

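The reverse process uses the U-Net to predict the noise $\epsilon_\theta(x_t,t)$, forms an estimate of the clean image by inverting the forward equation, $\hat{x}_0 = (x_t - \sqrt{1-\bar{\alpha}_t}\,\epsilon_\theta(x_t,t)) / \sqrt{\bar{\alpha}_t}$, and then steps toward $x_{t-1}$ using the posterior mean: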

\(\tilde{\mu}_t = \frac{\sqrt{\alpha_t}(1-\bar{\alpha}_{t-1})}{1-\bar{\alpha}_t} x_t + \frac{\sqrt{\bar{\alpha}_{t-1}}(1-\alpha_t)}{1-\bar{\alpha}_t} \hat{x}_0\)
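The posterior mean above can be sketched in two small steps: first recover $\hat{x}_0$ from the predicted noise by inverting the forward equation, then blend $x_t$ and $\hat{x}_0$ with the two coefficients. The function names and list-based inputs are hypothetical and chosen for illustration. The sketch covers only the mean, not the noise added when sampling $x_{t-1}$.

```python
import math


def predict_x0(x_t, eps_pred, alpha_bar_t):
    """Invert the forward step: x0_hat = (x_t - sqrt(1 - abar_t) * eps) / sqrt(abar_t)."""
    signal = math.sqrt(alpha_bar_t)
    noise = math.sqrt(1.0 - alpha_bar_t)
    return [(x - noise * e) / signal for x, e in zip(x_t, eps_pred)]


def posterior_mean(x_t, x0_hat, alpha_t, alpha_bar_t, alpha_bar_prev):
    """Mean of q(x_{t-1} | x_t, x0_hat), term by term as in the formula above."""
    denom = 1.0 - alpha_bar_t
    coef_xt = math.sqrt(alpha_t) * (1.0 - alpha_bar_prev) / denom
    coef_x0 = math.sqrt(alpha_bar_prev) * (1.0 - alpha_t) / denom
    return [coef_xt * x + coef_x0 * x0 for x, x0 in zip(x_t, x0_hat)]


if __name__ == "__main__":
    # Illustrative values only: alpha_bar_t = alpha_t * alpha_bar_prev.
    alpha_t, alpha_bar_prev = 0.9, 0.5
    alpha_bar_t = alpha_t * alpha_bar_prev
    x_t = [0.8, -0.1]
    eps_pred = [0.3, -0.4]  # stand-in for the U-Net output
    x0_hat = predict_x0(x_t, eps_pred, alpha_bar_t)
    print(posterior_mean(x_t, x0_hat, alpha_t, alpha_bar_t, alpha_bar_prev))
```

At the first timestep, where $\bar{\alpha}_{t-1} = 1$, the coefficient on $x_t$ vanishes and the mean reduces to $\hat{x}_0$.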

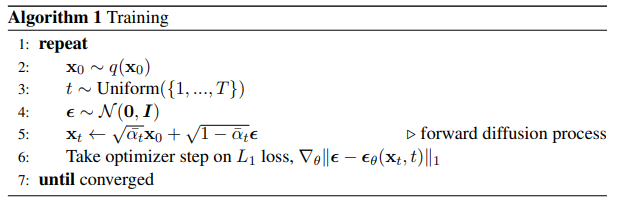

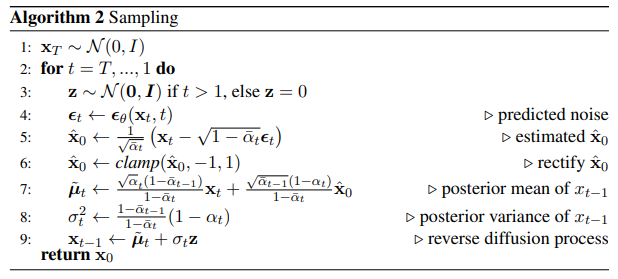

Forward Diffusion (PDF Figure)

Figure 1: Forward diffusion process (noise increases gradually)

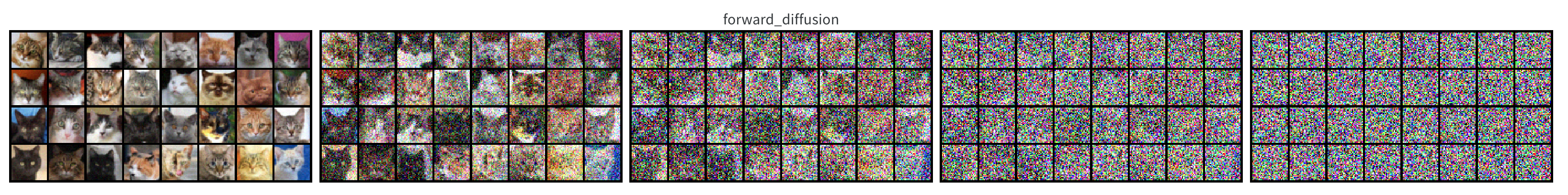

Reverse Diffusion (PDF Figure)

Figure 2: Reverse diffusion process (model reconstructs from noise)

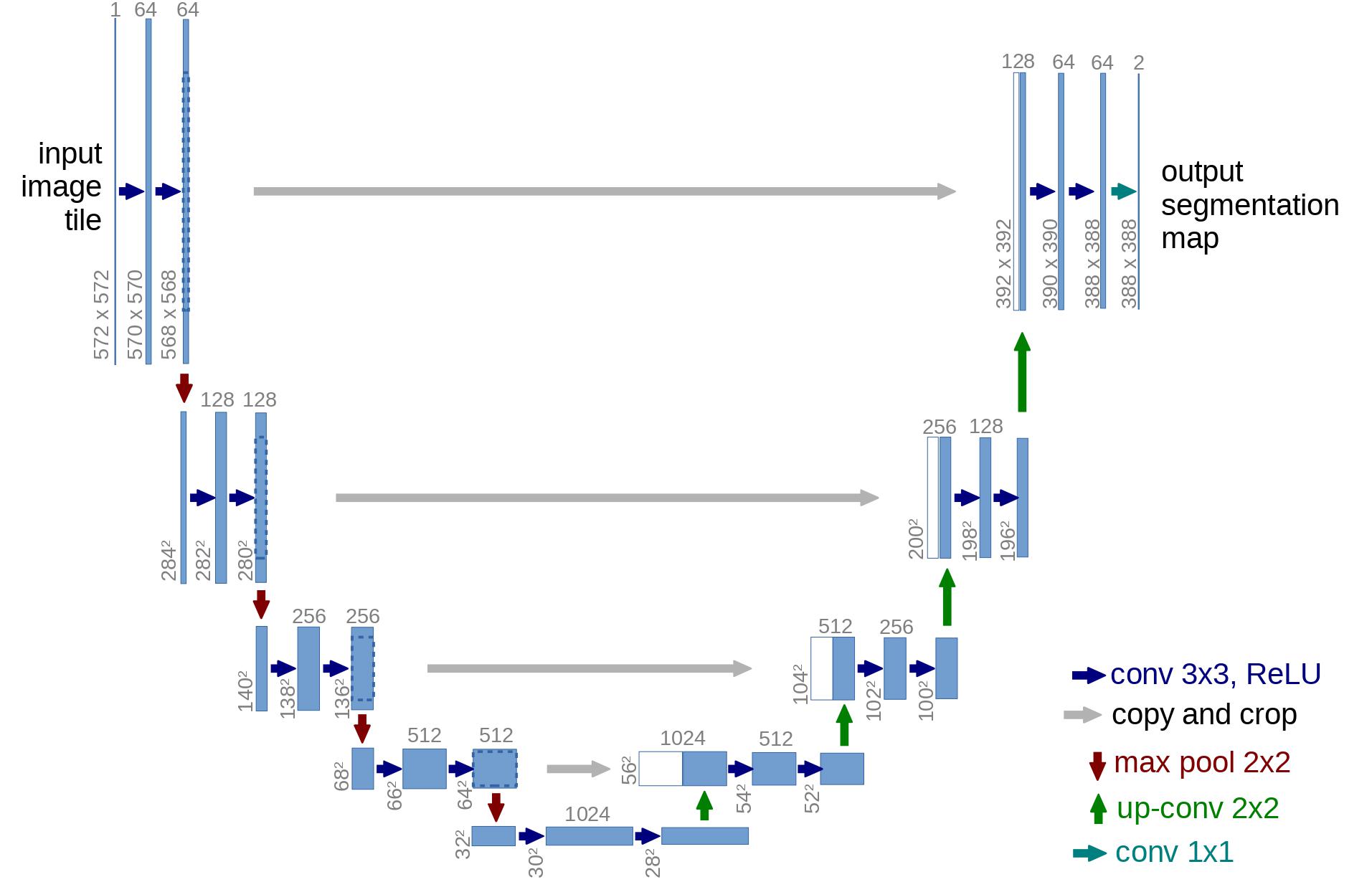

U-Net Noise Predictor

A lightweight U-Net was implemented with:

- Downsampling/upsampling blocks

- Skip connections

- GroupNorm + SiLU

- Time embedding injected into each residual block

- Middle-layer attention at low resolution

This network predicts the added noise $\epsilon_\theta(x_t,t)$, enabling reconstruction of $x_0$.
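The write-up does not say how the timestep is embedded before it is injected into the residual blocks. A common choice in DDPM implementations is a sinusoidal embedding, sketched below in plain Python as an assumption rather than a description of this project's code. The function name and the `max_period` default are illustrative.

```python
import math


def sinusoidal_embedding(t, dim, max_period=10000.0):
    """Map a timestep t to a length-`dim` vector of sines and cosines."""
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    half = dim // 2
    # Geometrically spaced frequencies, starting at 1 and decaying toward 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


if __name__ == "__main__":
    for t in (0, 1, 49):
        print(t, [round(v, 3) for v in sinusoidal_embedding(t, 8)])
```

In a typical design this vector is passed through a small MLP and added to the feature maps inside each residual block, so every block knows which noise level it is denoising.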

U-Net Diagram (PDF Figure)

Figure 3: U-Net architecture

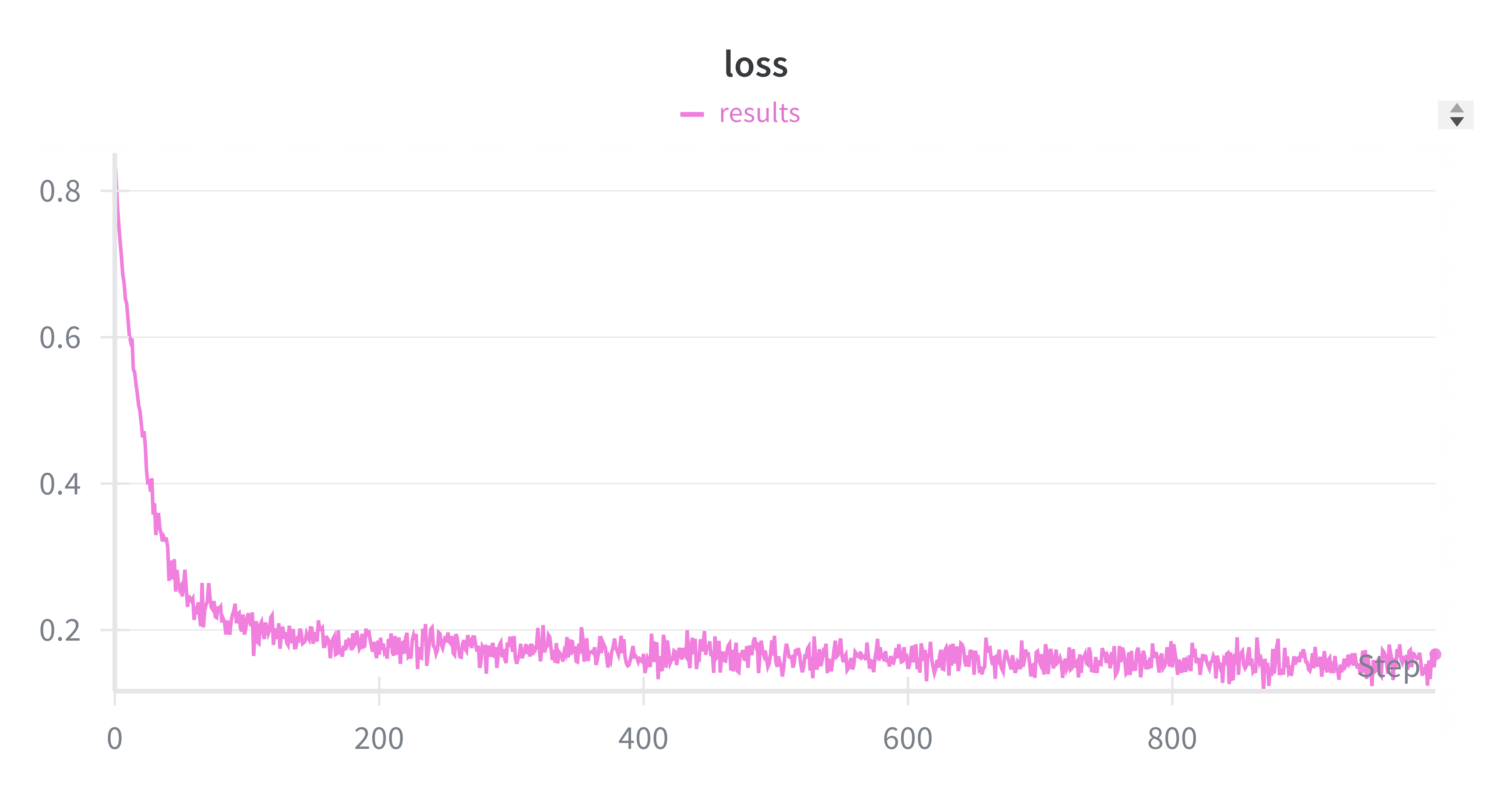

Training Pipeline & Configuration

Objective:

\(\mathcal{L} = \| \epsilon - \epsilon_\theta(x_t,t) \|_1\)
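To make the objective concrete, here is a minimal sketch of one loss evaluation: draw noise, form $x_t$ with the forward equation, and compare the model's noise prediction to the true noise. The `model` argument is a hypothetical stand-in for the U-Net, and the loss is averaged over elements, which differs from the norm above only by a constant factor.

```python
import math
import random


def l1_noise_loss(eps, eps_pred):
    """Mean absolute error between true and predicted noise."""
    return sum(abs(a - b) for a, b in zip(eps, eps_pred)) / len(eps)


def training_loss(model, x0, t, alpha_bar_t, rng):
    """One-sample loss: noise x0 to x_t, then score the model's noise guess."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal = math.sqrt(alpha_bar_t)
    noise = math.sqrt(1.0 - alpha_bar_t)
    x_t = [signal * x + noise * e for x, e in zip(x0, eps)]
    return l1_noise_loss(eps, model(x_t, t))


if __name__ == "__main__":
    def zero_model(x_t, t):
        # Placeholder that always predicts "no noise"; a real run would call the U-Net.
        return [0.0 for _ in x_t]

    print(training_loss(zero_model, [0.5, -0.25, 1.0], t=10, alpha_bar_t=0.5,
                        rng=random.Random(0)))
```

A model that predicts the noise exactly drives this loss to zero, which is what training pushes the U-Net toward.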

Setup:

- Dataset: AFHQ (cats)

- Timesteps: T = 50

- Optimizer: AdamW (1e-3)

- Batch size: 32

- Cosine noise schedule (Nichol & Dhariwal 2021)

- Hardware: Google Colab A100
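The cosine schedule from the setup list can be sketched as follows, using the definition in Nichol & Dhariwal (2021): $\bar{\alpha}_t = f(t)/f(0)$ with $f(t) = \cos^2\!\big(\tfrac{t/T + s}{1 + s}\cdot\tfrac{\pi}{2}\big)$. The offset `s = 0.008` and the clip at `0.999` are that paper's defaults and are assumed here, since the write-up does not state the values used. The function names are illustrative.

```python
import math


def cosine_alpha_bars(T, s=0.008):
    """Return [alpha_bar_0, ..., alpha_bar_T] with alpha_bar_0 == 1."""
    def f(t):
        return math.cos((t / T + s) / (1.0 + s) * math.pi / 2.0) ** 2
    f0 = f(0)
    return [f(t) / f0 for t in range(T + 1)]


def betas_from_alpha_bars(alpha_bars, max_beta=0.999):
    """beta_t = 1 - alpha_bar_t / alpha_bar_{t-1}, clipped to avoid beta == 1."""
    return [min(1.0 - cur / prev, max_beta)
            for prev, cur in zip(alpha_bars[:-1], alpha_bars[1:])]


if __name__ == "__main__":
    T = 50  # timestep count from the setup list
    alpha_bars = cosine_alpha_bars(T)
    betas = betas_from_alpha_bars(alpha_bars)
    print(len(betas), betas[0], betas[-1])
```

With only 50 timesteps each step removes a comparatively large share of the signal, and the final $\beta_T$ hits the clip value because $\bar{\alpha}_T$ is essentially zero.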

Training Loss Curve (PDF Figure)

Figure 4: Training loss curve

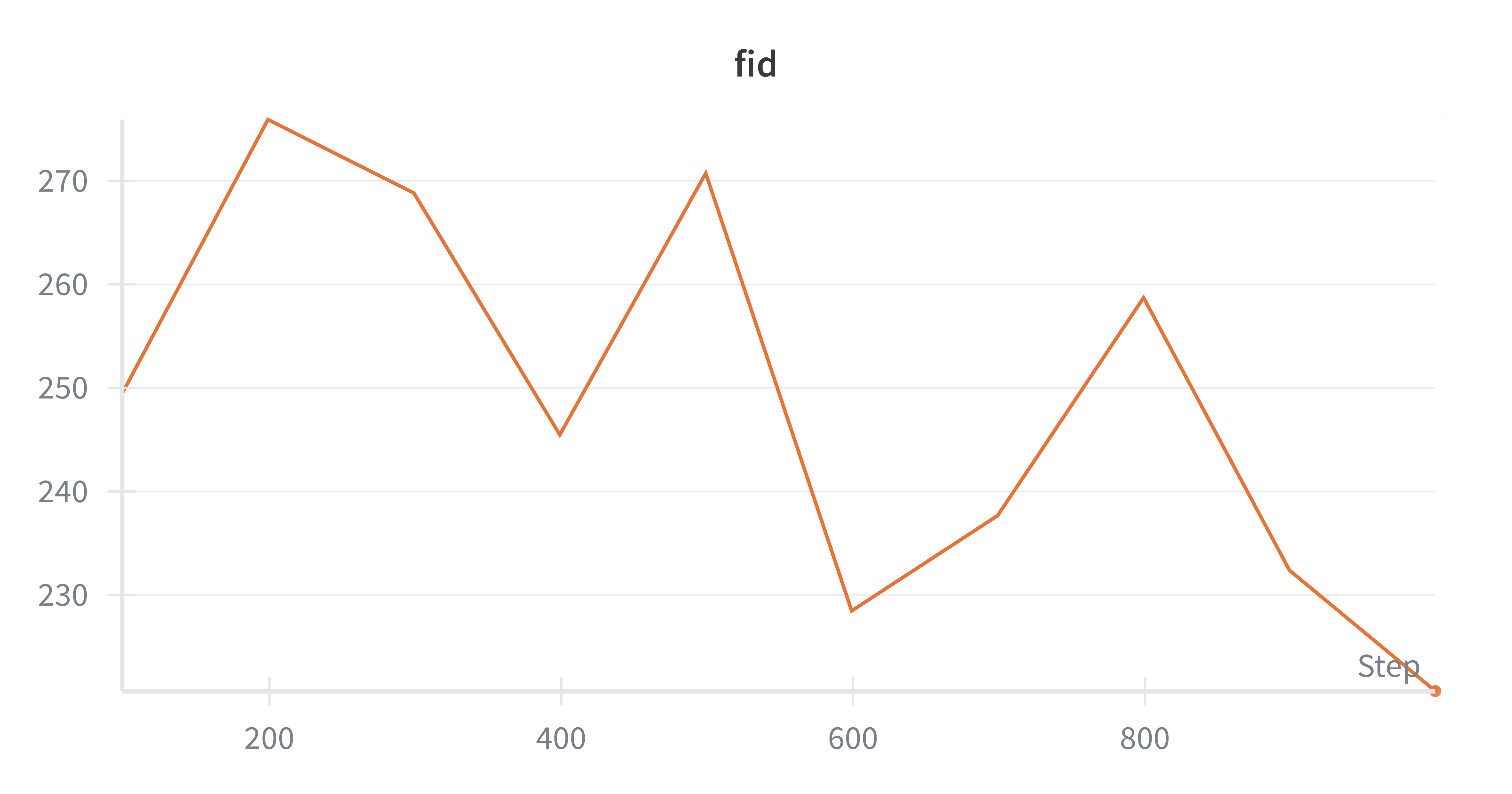

FID Tracking (PDF Figure)

Figure 5: FID progression over training steps

My Role & Contributions

As the sole developer, I:

- Implemented the full forward diffusion process

- Implemented reverse process sampling (p_sample, p_sample_loop, sample)

- Built the U-Net noise prediction model

- Engineered the full training pipeline and the L1 noise prediction loss

- Logged FID and produced visualizations (forward, backward, final samples)

- Completed the full 10k-step training run and evaluation

- Extracted and documented all results shown above

This project refined my understanding of variational inference, generative modeling, and the finer dynamics of diffusion processes.